Research

Overview

Perception is one of the fundamental challenges of robotics. For a robot to operate in a human environment (rather than a carefully controlled setting such as a factory), it must be able to reason about its surroundings and take them into account when making decisions. The role of perception is to take sensor information and convert it into a form that is useful for higher-level applications. Examples of perception algorithms include map building, object classification and face detection, to name but a few. Within the ACFR (Australian Centre for Field Robotics), perception algorithms have been developed for applications as diverse as defence, mining and undersea surveying.

My research looks at perception in dynamic environments where moving objects such as cars, bicycles, and pedestrians mean that the environment around the robot is constantly changing. Principally, I'm looking at ways to track these objects and understand where they've been, where they are going, and how they are interacting with each other and the environment around them.

What follows is a pretty high-level description of some of my research. If it's too much to read, watch a video instead.

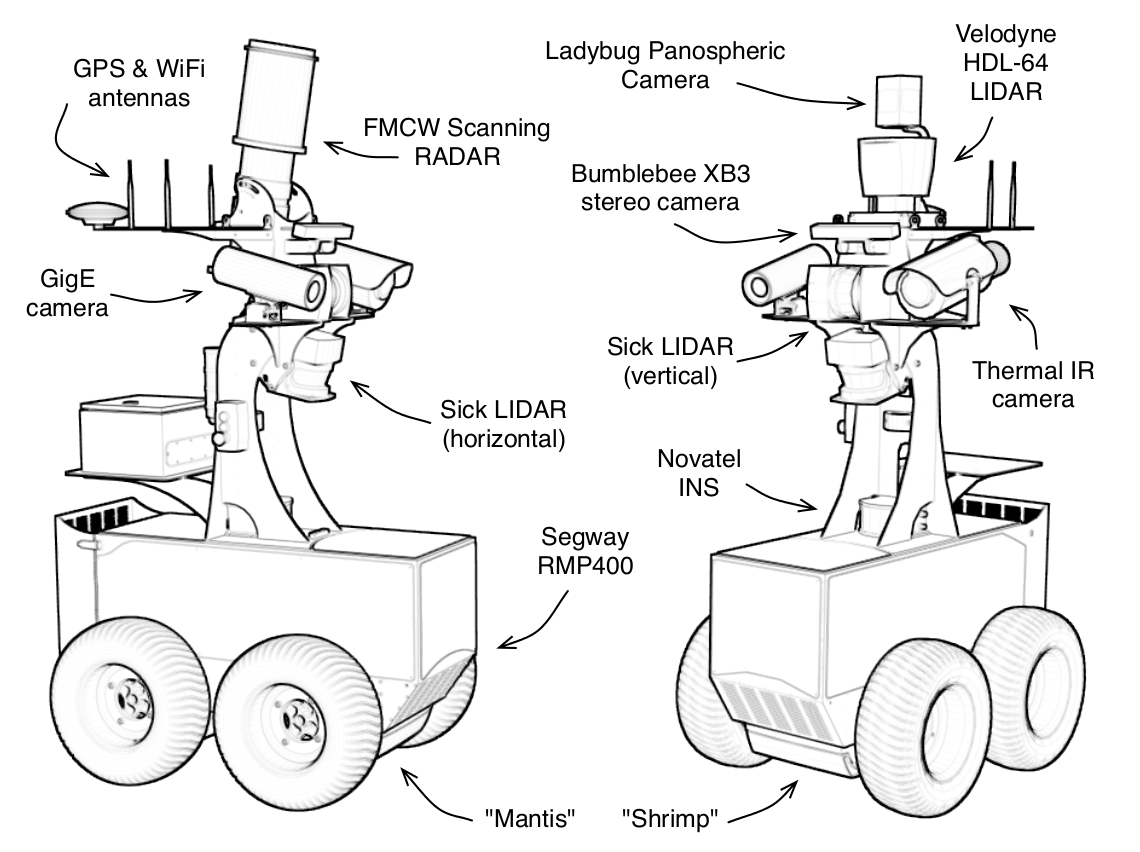

Robots!

"Shrimp" and "Mantis" are our research group's experimental platforms. They carry a cutting-edge payload of sensors (cameras, lasers, radar) and log a ridiculous amount of data (~500 GB/hour, or about 150 MB/s).

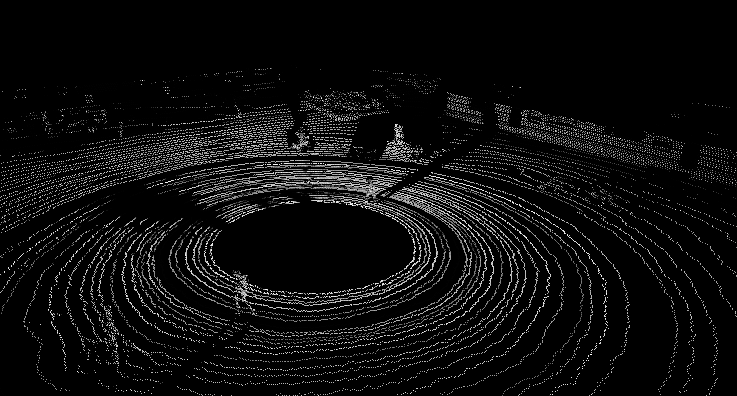

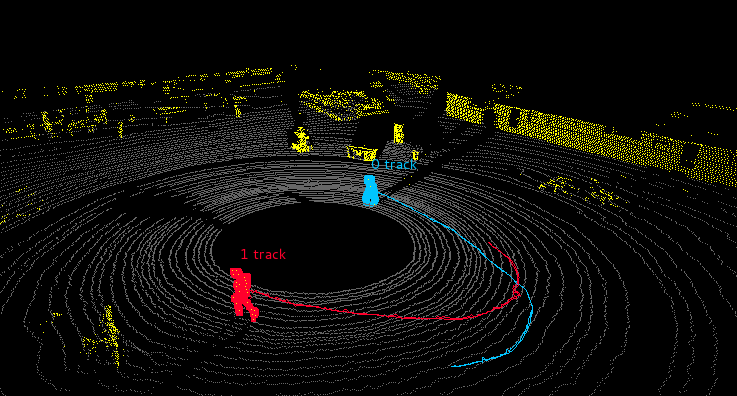

One of the sensors on "Shrimp" is a Velodyne HDL-64E LIDAR. It is a scanning laser sensor that makes over 1 million measurements a second and can be used to map the environment in 3D. An example of some raw Velodyne data is shown below.
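Each return from a spinning multi-beam lidar is a range measured along a known direction, so turning a scan into a 3D point cloud is essentially a spherical-to-Cartesian conversion. The sketch below assumes a simplified geometry in which every laser sits at the sensor origin with a fixed elevation angle and the spinning head reports an azimuth for each firing. Real sensors also need per-laser calibration corrections, which are omitted here. The function names and the 120 m `max_range` cutoff are illustrative assumptions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def beam_to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one return from sensor-centred spherical coordinates to (x, y, z).

    Assumed convention: azimuth is measured in the horizontal plane from the
    x axis; elevation is measured up from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection of the beam onto the x-y plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def scan_to_points(ranges: List[List[float]], azimuths_deg: List[float],
                   elevations_deg: List[float], max_range: float = 120.0) -> List[Point]:
    """ranges[i][j] is the range reported by laser i (at elevations_deg[i])
    when the head was at azimuths_deg[j].

    Ranges of 0 or beyond max_range are treated as 'no return' and dropped.
    """
    points = []
    for i, row in enumerate(ranges):
        for j, r in enumerate(row):
            if 0.0 < r <= max_range:
                points.append(beam_to_xyz(r, azimuths_deg[j], elevations_deg[i]))
    return points
```

With 64 lasers and a full revolution of azimuths, `ranges` becomes a 64-row grid, and it is this regular row-and-column structure of the scan that segmentation methods can exploit.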

Segmentation

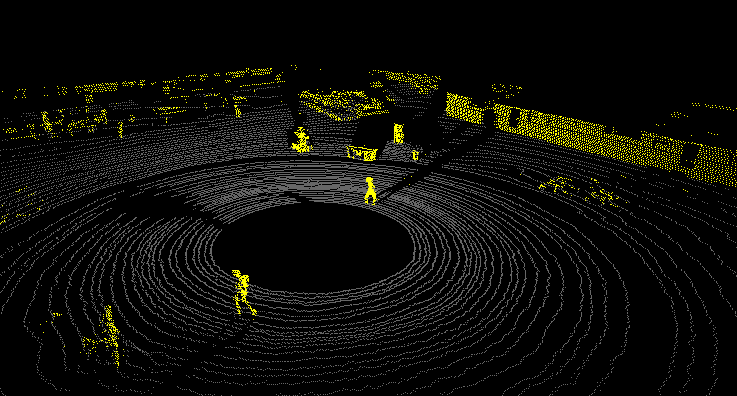

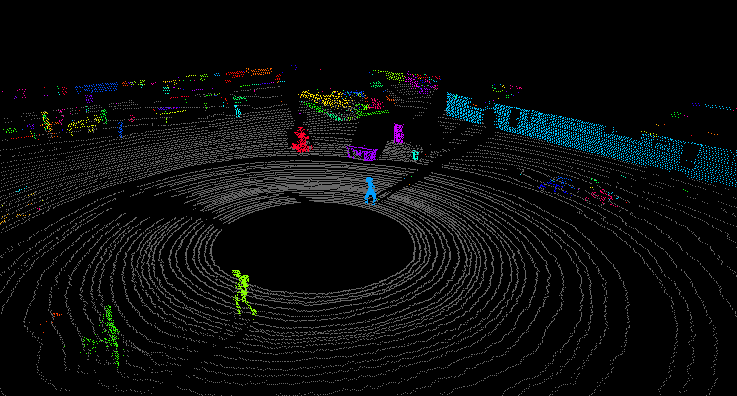

My research group has developed algorithms specifically for segmenting 3D data, i.e. separating objects (such as trees, people and buildings) from the ground and from one another. The approach shown here is called "mesh-voxel segmentation" [Douillard2011] and is designed to exploit the scan pattern of the Velodyne sensor. The following image shows ground detection.
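Mesh-voxel segmentation exploits the Velodyne scan pattern [Douillard2011]. The sketch below is not that algorithm but a much simpler grid-based stand-in that illustrates the basic idea of ground detection on an unordered point cloud. It assumes a frame with z pointing up and roughly level ground near z = 0. The cell size, tolerances and function name are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def label_ground(points: List[Point], cell_size: float = 0.5,
                 height_tol: float = 0.2, max_ground_z: float = 0.5) -> List[bool]:
    """Return one flag per point: True if the point is labelled as ground.

    A point counts as ground when it lies within height_tol of the lowest point
    in its 2D grid cell, and that lowest point is itself below max_ground_z
    (so a cell that only sees a roof or wall top is not mistaken for ground).
    """
    def cell_of(p: Point) -> Tuple[int, int]:
        return (int(p[0] // cell_size), int(p[1] // cell_size))

    # First pass: lowest z observed in each occupied cell.
    lowest: Dict[Tuple[int, int], float] = defaultdict(lambda: float("inf"))
    for p in points:
        c = cell_of(p)
        lowest[c] = min(lowest[c], p[2])

    # Second pass: compare every point against its cell's floor.
    labels = []
    for p in points:
        floor_z = lowest[cell_of(p)]
        labels.append(floor_z <= max_ground_z and p[2] - floor_z <= height_tol)
    return labels
```

A flat grid like this struggles on slopes and kerbs, which is one reason to prefer methods that use the sensor's scan structure and local gradients instead.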

After ground detection, the remaining points are clustered into segments using a voxel grid. It is now much easier to see that the scene contains two pedestrians on mostly flat ground, along with some other foreground objects. The wall of a building is visible in the top-right corner of the image.
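The clustering step can be illustrated with a voxel grid and a connected-components search, in which occupied voxels that touch are merged into one segment. This is a minimal sketch assuming the ground points have already been removed. The 0.2 m voxel size, the 26-connectivity rule and the function name are illustrative choices, not the parameters used in [Douillard2011].

```python
from collections import deque
from itertools import product
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def cluster_voxels(points: List[Point], voxel_size: float = 0.2) -> List[int]:
    """Assign a segment id to every (non-ground) point.

    Points are dropped into a voxel grid; occupied voxels that touch, including
    diagonally (26-connectivity), are merged into one segment by breadth-first search.
    """
    def voxel_of(p: Point) -> Voxel:
        return (int(p[0] // voxel_size), int(p[1] // voxel_size), int(p[2] // voxel_size))

    occupied: Dict[Voxel, int] = {voxel_of(p): -1 for p in points}  # -1 = not yet labelled
    offsets = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]

    next_id = 0
    for seed in occupied:
        if occupied[seed] != -1:
            continue  # already reached from an earlier seed
        occupied[seed] = next_id
        queue = deque([seed])
        while queue:
            vx, vy, vz = queue.popleft()
            for dx, dy, dz in offsets:
                nb = (vx + dx, vy + dy, vz + dz)
                if occupied.get(nb) == -1:  # occupied and unlabelled
                    occupied[nb] = next_id
                    queue.append(nb)
        next_id += 1

    return [occupied[voxel_of(p)] for p in points]
```

Working on voxels rather than raw points keeps the neighbour search cheap, at the cost that two objects closer than one voxel apart end up in the same segment.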

Tracking

Given a good segmentation, there are many methods available for tracking. The work I presented at ACRA'11 [Morton2011] analysed various methods and showed that tracking objects by their centroids alone worked as well as (if not better than) more complex methods that tried to exploit the full 3D information in the scan. The main lesson was that if segmentation works, tracking is easy. However, when the scene is cluttered, or when objects interact with each other, current tracking methods fail. The image below shows the results of basic Kalman filter tracking of the two people. The "tails" show the paths the people took as they walked through the scene.
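Centroid tracking can be sketched with a constant-velocity Kalman filter run on each segment's 2D centroid. This is a generic textbook filter, not necessarily the configuration evaluated in [Morton2011]. The per-axis decoupling, the noise values `q` and `r`, the initial covariance and the class names are all assumptions. The `history` list plays the role of the "tails".

```python
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def centroid(segment: Sequence[Point]) -> Tuple[float, float]:
    """Mean x, y of a segment's points; height is ignored for ground-plane tracking."""
    n = len(segment)
    return (sum(p[0] for p in segment) / n, sum(p[1] for p in segment) / n)


@dataclass
class AxisKF:
    """Constant-velocity Kalman filter for one axis; state is [position, velocity]."""
    pos: float
    vel: float = 0.0
    P: List[List[float]] = field(default_factory=lambda: [[1.0, 0.0], [0.0, 10.0]])
    q: float = 1.0   # assumed acceleration noise variance
    r: float = 0.05  # assumed centroid measurement variance (m^2)

    def predict(self, dt: float) -> None:
        # x <- F x and P <- F P F^T + Q, with F = [[1, dt], [0, 1]]
        # and Q the piecewise-constant white-acceleration model scaled by q.
        self.pos += self.vel * dt
        (a, b), (c, d) = self.P
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q * dt**4 / 4, b + dt * d + self.q * dt**3 / 2],
            [c + dt * d + self.q * dt**3 / 2, d + self.q * dt**2],
        ]

    def update(self, z: float) -> None:
        # Measurement model H = [1, 0]: only position is observed.
        (a, b), (c, d) = self.P
        s = a + self.r          # innovation variance
        k0, k1 = a / s, c / s   # Kalman gain
        innovation = z - self.pos
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]


class CentroidTrack:
    """Tracks one segment by its 2D centroid, with an independent filter per axis."""

    def __init__(self, segment: Sequence[Point]):
        x, y = centroid(segment)
        self.kx, self.ky = AxisKF(x), AxisKF(y)
        self.history = [(x, y)]  # the "tail" of past position estimates

    def step(self, segment: Sequence[Point], dt: float) -> Tuple[float, float]:
        zx, zy = centroid(segment)
        for kf, z in ((self.kx, zx), (self.ky, zy)):
            kf.predict(dt)
            kf.update(z)
        self.history.append((self.kx.pos, self.ky.pos))
        return self.history[-1]
```

Because the filter only ever sees one centroid per segment, its output is only as good as the segmentation: two people merged into one segment, or one person split into two, feed it a misleading centroid.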

Appearance Modelling

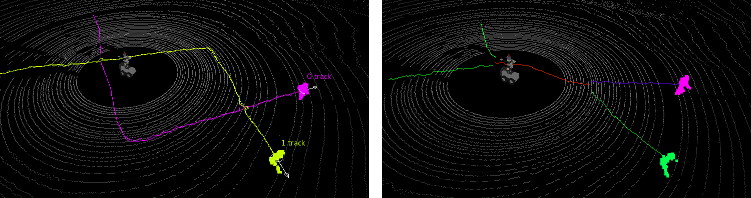

A major limitation of current tracking systems is their inability to deal with "interacting" objects: for example, several people who come together, form a group, and then move apart again. The example below shows two kinds of interaction: one where the objects cross paths quickly and can be tracked with a standard Kalman filter, and another where the people walk together for a time before separating.

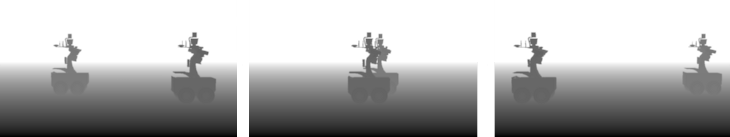
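The quick crossing can be handled because the tracker predicts where each person should be before matching detections to tracks. The sketch below uses brute-force global-nearest-neighbour association, an illustrative assumption (one common choice, not necessarily the one used here). Matching on predicted positions keeps identities through a crossing, whereas matching on last-seen positions swaps them. When two people walk together and appear as a single segment, one track is simply left unmatched, which is where this approach starts to break down. The function names and the 1 m gate are hypothetical.

```python
from itertools import permutations
from math import dist, inf
from typing import List, Optional, Sequence, Tuple

Vec = Tuple[float, float]


def predict(pos: Vec, vel: Vec, dt: float) -> Vec:
    """Constant-velocity prediction of a track's next position."""
    return (pos[0] + vel[0] * dt, pos[1] + vel[1] * dt)


def associate(predicted: Sequence[Vec], detections: Sequence[Vec],
              gate: float = 1.0) -> List[Optional[int]]:
    """Global nearest-neighbour association by brute force.

    Returns, for each track, the index of the detection assigned to it, or None
    if the best joint assignment leaves it unmatched (e.g. when two people have
    merged into a single segment). Brute force is only practical for a handful
    of objects; larger scenes would use the Hungarian algorithm instead.
    """
    options = list(range(len(detections))) + [None] * len(predicted)
    best: List[Optional[int]] = [None] * len(predicted)
    best_cost = inf
    for choice in permutations(options, len(predicted)):
        cost = 0.0
        for p, j in zip(predicted, choice):
            if j is None:
                cost += gate**2  # penalty for leaving a track unmatched
            else:
                d = dist(p, detections[j])
                cost += d**2 if d <= gate else inf  # reject pairs outside the gate
        if cost < best_cost:
            best, best_cost = list(choice), cost
    return best
```

For two people at x = -0.1 m and x = +0.1 m walking towards each other at 1 m/s, one 0.2 s step later the detections are at the same two positions. Matching on predictions pairs each track with the detection on the far side (the correct result), while matching on last-seen positions would silently swap them.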

Or consider the scenario below, where two robots are observed by a range sensor before, during and after an interaction. Did the robots switch places, or return to where they began? From range data alone, the answer is unclear.

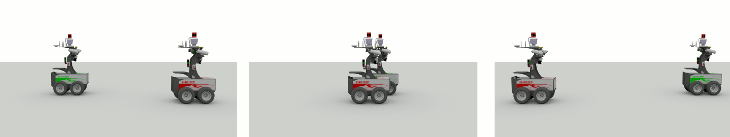

Another sensor, however, may be able to resolve the ambiguity. In this case, a colour camera would be able to tell us that the robots crossed paths.
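One simple way to use the camera is to compare each robot's colour before and after the interaction and ask which hypothesis, "returned" or "crossed", explains the colours better. The sketch assumes each robot's pixels have already been extracted from the image (for instance by projecting its laser segment into the camera, a step not shown). It uses joint RGB histograms with histogram intersection as the similarity measure. These choices and all function names are illustrative. If the two robots look alike, colour cannot separate the hypotheses and the function defaults to "returned".

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def colour_histogram(pixels: Sequence[RGB], bins: int = 4) -> List[float]:
    """Normalised joint RGB histogram with bins**3 cells; channel values are 0-255."""
    hist = [0.0] * bins**3
    for r, g, b in pixels:
        # Map each 0-255 channel value to a bin index in [0, bins - 1].
        ri, gi, bi = (v * bins // 256 for v in (r, g, b))
        hist[(ri * bins + gi) * bins + bi] += 1.0
    return [h / len(pixels) for h in hist]


def intersection(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Histogram intersection: 1.0 for identical normalised histograms, 0.0 for disjoint ones."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def resolve_interaction(before: Sequence[List[float]], after: Sequence[List[float]]) -> str:
    """Decide whether two objects returned to their starting places or crossed paths.

    before[k] is the appearance of the object at position k before the interaction;
    after[k] is the appearance of the object at position k afterwards.
    """
    returned = intersection(before[0], after[0]) + intersection(before[1], after[1])
    crossed = intersection(before[0], after[1]) + intersection(before[1], after[0])
    return "returned" if returned >= crossed else "crossed"
```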

So whilst a laser sensor is very good at tracking the location of objects in 3D, other sensors (cameras, for instance) may be much better at determining their identity. I'm currently working on methods that combine data from several different sensors to make it possible to track objects through complex interactions.
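As a rough illustration of combining the two sensors, a single association cost can add a laser position term to a camera appearance term. The weighted sum below, its weights, the brute-force assignment and the function names are assumptions made for this sketch, not a description of the methods under development.

```python
from itertools import permutations
from math import dist
from typing import List, Sequence, Tuple

Vec = Tuple[float, float]
Observation = Tuple[Vec, List[float]]  # (laser position, normalised colour histogram)


def fused_cost(track: Observation, detection: Observation,
               w_pos: float = 1.0, w_app: float = 2.0) -> float:
    """Weighted sum of laser position error (metres) and camera appearance dissimilarity."""
    (p, h_track), (q, h_det) = track, detection
    similarity = sum(min(a, b) for a, b in zip(h_track, h_det))  # histogram intersection
    return w_pos * dist(p, q) + w_app * (1.0 - similarity)


def best_assignment(tracks: Sequence[Observation], detections: Sequence[Observation],
                    w_app: float = 2.0) -> Tuple[int, ...]:
    """Brute-force the lowest-cost one-to-one assignment (equal counts assumed).

    Element i of the result is the detection index assigned to track i.
    """
    return min(
        permutations(range(len(detections))),
        key=lambda perm: sum(fused_cost(tracks[i], detections[j], w_app=w_app)
                             for i, j in enumerate(perm)),
    )
```

Setting `w_app=0.0` reduces this to position-only matching, which is all a range sensor can offer. When two objects end up near each other's previous positions, that setting picks the pairing the colours contradict.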